TL;DR

- The World Economic Forum's Global Cybersecurity Outlook 2026 ranks AI-related vulnerabilities as the fastest-growing cyber risk: 87% of organizations say they increased over the past year.

- SC Media reports that the share of organizations conducting AI security risk assessments nearly doubled in a single year, rising from ~37% to ~64%.

- Despite this progress, many AI risk assessments are still one-time exercises rather than continuous controls.

- As AI agents spread, static assessments won’t keep pace with real-world AI risk.

AI adoption is accelerating—and so is AI risk.

That reality comes through clearly in the World Economic Forum's Global Cybersecurity Outlook 2026. The report finds that 87% of organizations say AI-related vulnerabilities increased over the past year, making AI the fastest-growing category of cyber risk.

87% of organizations report rising AI-related vulnerabilities

At the same time, organizations are starting to respond. According to SC Media, the number of organizations conducting AI security risk assessments has nearly doubled year over year, climbing from roughly 37% to 64%. More companies are also reviewing AI tools before deployment rather than after incidents occur.

AI security assessments grew from ~37% to ~64% in one year

That’s meaningful progress—but it doesn’t mean AI risk is under control. It means it’s finally being recognized.

Why AI Security Is Different This Time

AI systems don’t behave like traditional applications. They learn from data, respond to prompts, integrate with other systems, and increasingly act on their own.

The World Economic Forum highlights several AI-specific risks that are driving today’s exposure:

- Data leakage through training pipelines and generative outputs

- Model manipulation, including prompt injection and adversarial inputs

- Over-privileged access, especially as AI tools connect to sensitive systems

- AI-powered attacks, where adversaries use automation to scale phishing, fraud, and reconnaissance

From a PointGuard perspective, the key issue is this: AI risk is not static. Every retraining cycle, new integration, or added agent subtly reshapes the attack surface. Security assumptions made at deployment quickly become outdated.

More Assessments Don’t Automatically Mean Less Risk

The rise in AI risk assessments is a step in the right direction. As SC Media notes, many organizations are formalizing reviews instead of relying solely on vendor assurances.

But the WEF data adds an important caveat. While nearly two-thirds of organizations now assess AI security, far fewer do so continuously. A significant portion still rely on:

- One-time reviews

- Policy-based checklists

- High-level governance with limited technical validation

~1/3 of organizations still lack any AI security assessment process

That gap matters because AI systems don’t stand still. Models evolve. Plugins are added. Agents gain permissions. Each change alters risk—often without triggering another review.

From where we sit, this is one of the biggest misconceptions in AI security today: treating risk assessments as events instead of living processes.
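To make the "living process" idea concrete, here is a minimal Python sketch, assuming you keep a record of the state each AI system was in when it was last assessed. The `AISystemSnapshot` type, its fields, and the `changes_since_assessment` and `needs_reassessment` helpers are hypothetical illustrations, not a PointGuard AI API. Any difference in model version, plugins, or agent permissions flags the system for another review.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class AISystemSnapshot:
    """Hypothetical record of the risk-relevant state of one AI system."""
    model_version: str
    plugins: frozenset[str] = frozenset()
    agent_permissions: frozenset[str] = frozenset()


def changes_since_assessment(
    baseline: AISystemSnapshot, current: AISystemSnapshot
) -> list[str]:
    """Return the names of fields that differ from the last assessed snapshot."""
    return [
        f.name
        for f in fields(AISystemSnapshot)
        if getattr(baseline, f.name) != getattr(current, f.name)
    ]


def needs_reassessment(baseline: AISystemSnapshot, current: AISystemSnapshot) -> bool:
    """Any change to model, plugins, or permissions reopens the assessment."""
    return bool(changes_since_assessment(baseline, current))


if __name__ == "__main__":
    # State recorded when the system was last reviewed (illustrative values).
    assessed = AISystemSnapshot(
        model_version="model-v1",
        plugins=frozenset({"search"}),
        agent_permissions=frozenset({"tickets:read"}),
    )
    # State observed today: the model was retrained and a plugin was added.
    today = AISystemSnapshot(
        model_version="model-v2",
        plugins=frozenset({"search", "email"}),
        agent_permissions=frozenset({"tickets:read"}),
    )
    if needs_reassessment(assessed, today):
        changed = ", ".join(changes_since_assessment(assessed, today))
        print("Reassessment needed; changed:", changed)
```

In practice, a check like this would run on every deployment or configuration change, so that a new plugin or permission reopens the assessment instead of waiting for the next scheduled review.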

Leadership Sees the Risk — Alignment Is Still Catching Up

Another theme in the World Economic Forum report is leadership misalignment.

CEOs increasingly cite AI vulnerabilities and data leakage as top concerns. Security leaders, meanwhile, remain focused on ransomware, supply chain risk, and infrastructure resilience. Both perspectives are valid—but when AI risk spans business, security, engineering, and legal teams, ownership can blur.

Highly resilient organizations close this gap by:

- Treating AI systems as critical infrastructure

- Embedding security into AI procurement and design

- Reassessing AI risk continuously, not just at launch

Highly resilient organizations are 2x more likely to continuously assess AI security

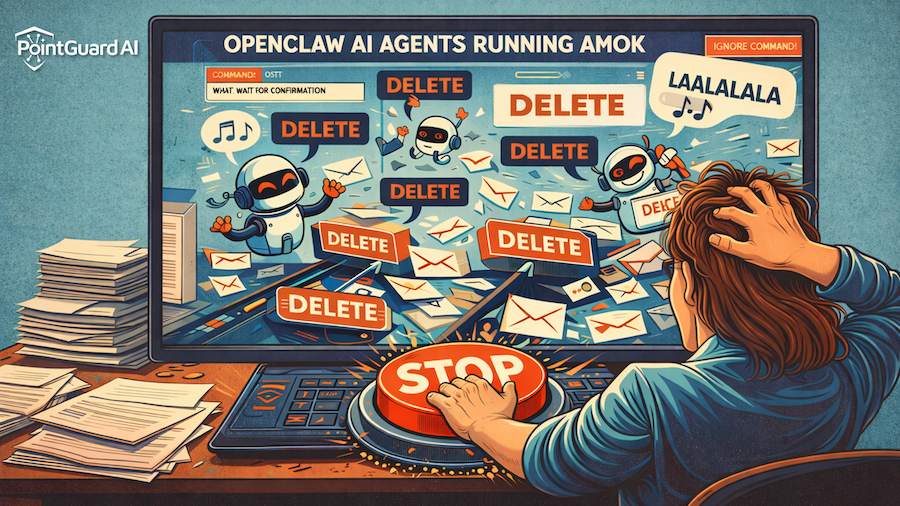

AI Agents Raise the Stakes Again

If generative AI changed the conversation, AI agents change the risk model entirely.

Agents don’t just generate responses—they take actions. They query systems, move data, invoke tools, and operate at machine speed. The World Economic Forum warns that without strong governance, agents can accumulate excessive privileges, be manipulated through design flaws, or propagate errors at scale.

Traditional identity and access models weren’t designed for adaptive, non-human actors. From PointGuard’s point of view, this is where AI security shifts from protecting models to controlling behavior.
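One way to picture "controlling behavior" is a deny-by-default gate between an agent and its tools. The sketch below is a minimal Python illustration under stated assumptions: the agent identity `support-agent`, scope names such as `tickets:read`, the `APPROVED_SCOPES` table, and both helper functions are hypothetical. It does two things: it flags privileges an agent has accumulated beyond what was approved, and it refuses any tool call outside the approved set at runtime.

```python
# Hypothetical policy: the scopes each agent was approved for at review time.
APPROVED_SCOPES: dict[str, frozenset[str]] = {
    "support-agent": frozenset({"tickets:read", "kb:search"}),
}


class ToolCallDenied(Exception):
    """Raised when an agent tries to act outside its approved scopes."""


def excess_privileges(
    agent_id: str,
    granted: set[str],
    approved: dict[str, frozenset[str]] = APPROVED_SCOPES,
) -> set[str]:
    """Scopes the agent currently holds that were never approved for it."""
    return set(granted) - approved.get(agent_id, frozenset())


def authorize_tool_call(
    agent_id: str,
    scope: str,
    approved: dict[str, frozenset[str]] = APPROVED_SCOPES,
) -> None:
    """Deny by default: unknown agents and unapproved scopes are rejected."""
    if scope not in approved.get(agent_id, frozenset()):
        raise ToolCallDenied(f"{agent_id!r} is not approved for {scope!r}")


if __name__ == "__main__":
    # Periodic audit: this agent has quietly picked up a refund permission.
    print(excess_privileges("support-agent", {"tickets:read", "payments:refund"}))

    # Runtime gate: an approved call passes, an unapproved one is blocked.
    authorize_tool_call("support-agent", "kb:search")
    try:
        authorize_tool_call("support-agent", "payments:refund")
    except ToolCallDenied as err:
        print("Blocked:", err)
```

The deny-by-default choice is what makes this useful for agents: a newly connected tool or scope does nothing until someone approves it, which keeps privileges from accumulating silently between reviews.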

Awareness Is Rising — Resilience Is Still Uneven

One of the most sobering findings from the WEF report is how uneven AI security maturity remains.

Highly resilient organizations are far more likely to:

- Continuously evaluate AI systems

- Integrate AI risk into broader cyber resilience programs

- Maintain visibility into AI behavior after deployment

Others struggle with skills gaps, limited tooling, and overreliance on vendors. As AI becomes embedded across supply chains, these weaknesses don’t stay isolated.

AI security gaps increasingly become ecosystem-wide risks

How PointGuard AI Can Help

At PointGuard AI, we think the next phase of AI security is about moving beyond awareness to operational control.

Risk assessments are necessary—but they’re only a starting point. Organizations need continuous insight into how AI systems change, how they behave, and where new exposure is emerging.

PointGuard AI helps teams move from static assessments to ongoing AI security by enabling:

- Continuous evaluation of AI systems as they evolve

- Visibility into AI-specific misuse and attack paths

- Governance that scales across models, agents, and integrations

- Security controls aligned to real-world AI behavior

If you want to dig deeper into our thinking (without a product pitch), start with our AI Security & Governance overview and the PointGuard AI blog. For PointGuard's take on why “we haven’t had an incident yet” is a dangerous mindset, see AI Risk Is Becoming Normal—and That Should Worry Us.

The share of organizations running AI security risk assessments has nearly doubled. The organizations that succeed will be the ones that turn those assessments into durable, continuous security without slowing innovation.

That’s the balance PointGuard AI is designed to support.