AI agents are rapidly moving from experimental tools to production-critical systems. Unlike traditional software, agents can perceive their environment, reason over data, make decisions, and take action—often with varying degrees of autonomy. This shift enables powerful new use cases, but it also introduces a new class of security risks that many organizations are not yet prepared to manage.

Research organizations such as the Stanford Human-Centered AI Institute and MIT CSAIL have highlighted how agentic systems differ fundamentally from conventional applications, particularly in how they interact with tools, data, and users. Not all AI agents are created equal, and understanding these differences is essential for building effective security controls.

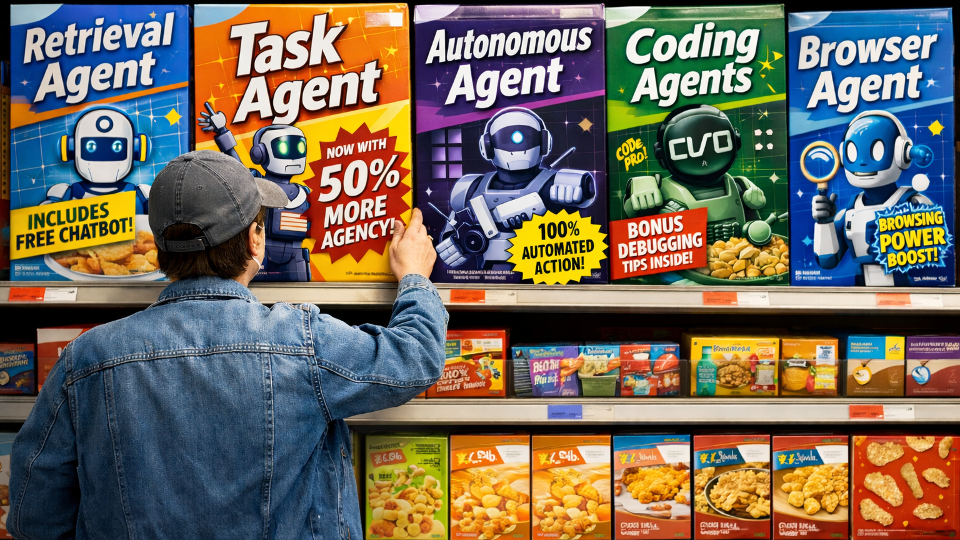

Here are five common types of AI agents, how they function, and the specific security challenges they introduce.

Retrieval Agents: Knowledge Access with Hidden Risk

Primary function: Fetching and analyzing information from internal or external knowledge bases

Autonomy level: Low to medium

Retrieval agents are often the first step organizations take into agent-based AI. These agents power question-and-answer systems, copilots, and search experiences using techniques like Retrieval-Augmented Generation (RAG), a pattern widely discussed in open research such as this overview from Meta AI.

While retrieval agents may appear low risk due to their limited autonomy, they introduce serious concerns around data exposure and access control. Academic research has demonstrated how prompt injection attacks can manipulate retrieval pipelines, causing models to surface unintended or sensitive information.

Additional risks include data poisoning—where untrusted content is inserted into knowledge sources—and a lack of visibility into what data is being retrieved at runtime. Without governance, retrieval agents can quietly become a major source of sensitive data leakage.

Task Agents: Automation at Scale—and Speed

Primary function: Executing predefined, structured tasks

Autonomy level: Low

Task agents execute clearly defined workflows such as provisioning access, processing tickets, or triggering operational actions. While similar to traditional automation, AI-based task agents introduce more flexible decision logic and contextual reasoning.

The main security challenge lies in over-privileged access. Task agents often require API keys, credentials, or system permissions to perform their duties. If these privileges are too broad, a compromised agent can carry out unauthorized actions at machine speed.

Security researchers have long warned about automation abuse and privilege escalation in software-driven workflows, a concern echoed in guidance from organizations like NIST. Even low-autonomy agents can cause large-scale damage if inputs are manipulated or safeguards are insufficient.

Autonomous Agents: Maximum Power, Maximum Risk

Primary function: Independently planning, adapting, and executing complex objectives

Autonomy level: High

Autonomous agents represent the most advanced—and risky—form of agentic AI. These systems can set goals, decompose them into steps, adapt to changing inputs, and operate with minimal human oversight.

The security challenge with autonomous agents is unpredictability at scale. These systems may chain actions across multiple tools, APIs, and data sources in ways that were never explicitly programmed. This creates risk around unintended actions, policy violations, and cascading failures.

Traditional security controls, which assume deterministic behavior, are poorly suited to managing systems that learn and adapt in real time. Without continuous monitoring and enforcement, autonomous agents can unintentionally bypass controls or optimize for goals in ways that conflict with organizational policies.

Coding Agents: Accelerating Development, Expanding Attack Surfaces

Primary function: Code generation, debugging, testing, and review

Autonomy level: Medium to high

Coding agents are transforming how software is built. They can generate new code, refactor existing codebases, and assist with testing and deployment. While productivity gains are well documented, so are the risks.

Studies such as this research from Stanford University show that AI-generated code can introduce vulnerabilities, insecure dependencies, or logic flaws if not carefully reviewed. Coding agents also often require access to source repositories, CI/CD pipelines, and secrets—making them high-value targets.

Perhaps most concerning is the software supply chain risk. A compromised coding agent can introduce malicious logic directly into production systems, bypassing traditional security review processes.

Browser Agents: The Web as an Attack Vector

Primary function: Automating actions within web browsers

Autonomy level: Medium

Browser agents interact with websites much like humans do—navigating pages, filling forms, scraping data, and invoking APIs. This makes them powerful, but also highly exposed.

By design, browser agents operate in untrusted environments. Security researchers have shown how malicious web content can manipulate AI behavior through prompt-based attacks embedded in pages, as described in this analysis by the Carnegie Mellon Software Engineering Institute.

Browser agents often handle credentials, session tokens, and sensitive data. Without isolation, monitoring, and runtime controls, a single compromised browsing session can lead to credential theft or unauthorized access to internal systems.

How PointGuard AI Helps Secure Agentic Systems

As AI agents become more capable and autonomous, security must evolve beyond static controls and pre-deployment reviews. PointGuard AI is designed specifically to secure AI and agentic systems throughout their operational lifecycle.

PointGuard AI’s Agentic Security capabilities provide real-time visibility into agent behavior, enabling organizations to enforce policies, prevent unsafe actions, and detect anomalous decision-making as it occurs.

Combined with PointGuard AI’s core AI capabilities for AI discovery, security posture management, red teaming, runtime guardrails, and policy enforcement, organizations gain a unified platform to secure everything from simple retrieval agents to fully autonomous systems.

As enterprises adopt retrieval, task, autonomous, coding, and browser agents, agentic security is no longer optional. With PointGuard AI, organizations can innovate confidently—while keeping AI agents safe, accountable, and under control.