While the AI revolution is upon us, there is considerable evidence that security remains both a concern and a blocker for many AI initiatives. That is borne out by the 2025 McKinsey Global Survey on AI, which reveals that while enterprise adoption of AI is accelerating, security concerns are becoming significant barriers to scaling these technologies. As organizations increasingly embed AI into core operations, they face a complex landscape of cybersecurity threats, regulatory scrutiny, and trust challenges that must be addressed to realize AI’s full potential.

Escalating Security Risks in AI Adoption

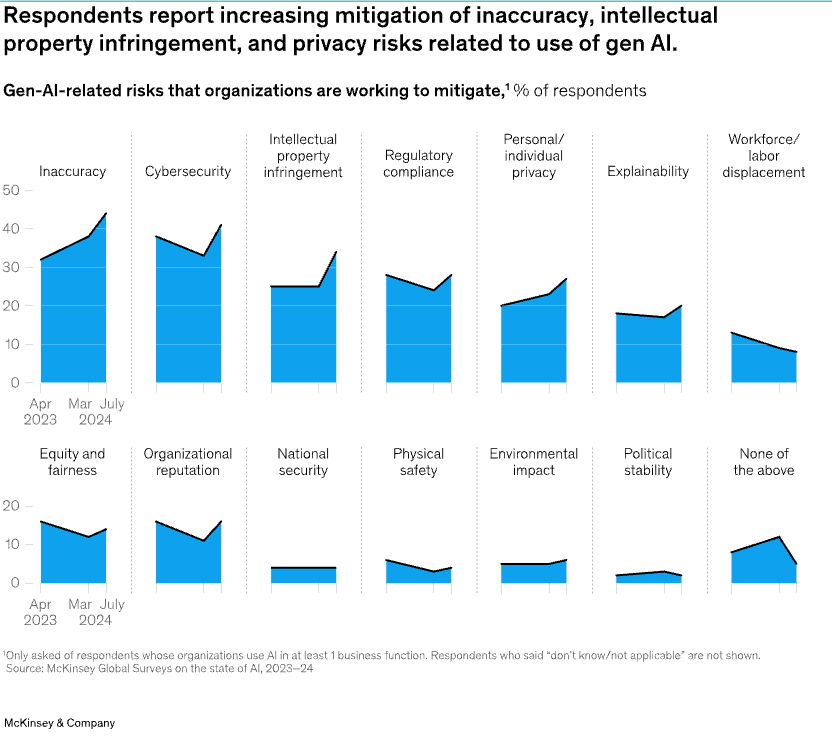

Security has emerged as a critical concern as AI capabilities grow more powerful and more deeply embedded in business operations. The McKinsey survey indicates that organizations are increasingly taking steps to mitigate gen-AI-related risks such as inaccurate outputs (including hallucinations), intellectual property (IP) infringement, and data privacy violations. However, the depth and consistency of these mitigation strategies vary widely.

For instance, while many organizations have introduced human review of gen AI outputs before they are used externally, the rigor of that review varies. Some companies require full human validation, while others perform only partial or ad hoc reviews. This inconsistency suggests that even as awareness of AI risks grows, implementation of safeguards is uneven, particularly at scale.

Cybersecurity remains a top concern. AI expands the enterprise attack surface by introducing new dependencies on data pipelines, third-party APIs, and model behavior. Yet only a subset of organizations has established protocols for ongoing monitoring of these systems, making many AI deployments vulnerable to adversarial manipulation, model theft, or data leakage. Larger enterprises tend to be more proactive, but industry-wide, the accuracy, explainability, and trustworthiness of AI outputs remain unresolved challenges.

Interpreting Risk Mitigation Trends in Generative AI

A striking data visualization in the McKinsey report illustrates how organizations are approaching gen AI risk mitigation. The chart tracks adoption of ten key mitigation strategies across three periods—April 2023, March 2024, and July 2024—highlighting both progress and persistent gaps.

Most Common Safeguards: Human Review and Guidelines

The most widely adopted safeguards include:

- Human review of AI-generated content before use

- Clear internal guidelines for gen AI use

- Restriction of certain high-risk use cases

These practices show steady, if modest, growth across the time periods. For example, human review adoption climbed from 35% to over 45%, while usage guidelines increased incrementally. This reflects a broader trend of organizations acknowledging the need for governance—even if it’s not yet deeply embedded into operational workflows.

Neglected Practices: Monitoring, Testing, and Impact Assessment

On the other hand, more technical and forward-looking controls lag far behind. Fewer than 15% of respondents reported implementing:

- Output testing for harmful or toxic content

- Continuous monitoring of AI systems in production

- Environmental or social impact assessments of AI use
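To make the gap between policy-level and technical controls more concrete, here is a minimal, hypothetical sketch of two of the neglected practices: testing each gen AI output before release and continuously monitoring the share of flagged outputs in production. It is an illustration only, not McKinsey's or PointGuard AI's method. The check names, the blocked-term list, the email regex, the window size, and the alert threshold are all assumptions; a production system would rely on trained toxicity and PII classifiers rather than keyword matching.

```python
import re
from collections import deque
from dataclasses import dataclass, field

# Hypothetical placeholder checks. Real deployments would use a trained
# toxicity classifier and a dedicated PII detector instead of these patterns.
BLOCKED_TERMS = {"idiot", "stupid"}
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def check_output(text: str) -> list[str]:
    """Return the names of the checks that a model output fails."""
    failures = []
    words = set(re.findall(r"[a-z']+", text.lower()))
    if words & BLOCKED_TERMS:
        failures.append("toxic_language")
    if EMAIL_PATTERN.search(text):
        failures.append("possible_pii")
    return failures


@dataclass
class OutputMonitor:
    """Tracks the share of flagged outputs over a sliding window (assumed design)."""
    window: int = 100
    alert_rate: float = 0.05
    _recent: deque = field(default_factory=deque, init=False, repr=False)

    def record(self, flagged: bool) -> bool:
        """Record one result; return True if the flagged share exceeds alert_rate."""
        self._recent.append(flagged)
        if len(self._recent) > self.window:
            self._recent.popleft()
        rate = sum(self._recent) / len(self._recent)
        return rate > self.alert_rate


def gate(text: str, monitor: OutputMonitor) -> str:
    """Release outputs that pass every check; route the rest to human review."""
    failures = check_output(text)
    if monitor.record(bool(failures)):
        print("ALERT: flagged-output rate above threshold")
    return "needs_human_review" if failures else "released"
```

Routing flagged outputs to human review rather than silently blocking them ties automated testing to the review step many organizations already have, so reviewers spend their time on the outputs that actually failed a check, while the monitor surfaces drift in output quality over time.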

This disparity highlights a critical risk: while surface-level controls are becoming common, foundational practices that ensure long-term AI reliability and accountability are being overlooked. Many organizations may be underestimating the operational and reputational risks posed by bias, hallucination, or drift in production AI models.

Security Implications and Readiness Gaps

Overall, the chart underscores a reactive posture toward AI risk. Most organizations are still in the early stages of integrating robust safeguards across the AI lifecycle. The progress is encouraging, but the remaining gaps point to the need for tools and frameworks that can automate, scale, and enforce AI security policies consistently across functions and teams.

Organizational Responses to AI Security Challenges

To address these risks, leading organizations are beginning to implement dedicated AI governance strategies. Notably, McKinsey found that CEO involvement in AI oversight strongly correlates with higher bottom-line impact from gen AI initiatives. This suggests that when security, governance, and ethical considerations are driven from the top, enterprises are better positioned to operationalize AI safely and effectively.

In addition to leadership alignment, companies are establishing cross-functional teams to drive gen AI deployment while introducing role-based training, internal feedback loops, and clearer data policies. These steps are critical to building AI systems that users can trust and regulators can approve.

Still, governance maturity varies dramatically. Some sectors—like financial services and healthcare—are further along due to strict compliance environments. Others, particularly in manufacturing and logistics, are just beginning to grapple with the full risk spectrum. Across the board, security remains both a foundational necessity and a potential competitive differentiator.

Addressing Security Gaps with PointGuard AI

As security challenges around AI adoption intensify, forward-looking organizations are turning to purpose-built platforms like PointGuard AI—formerly known as AppSOC—to strengthen the resilience of their AI ecosystems. PointGuard AI provides a comprehensive approach to securing both AI systems and the applications they power, closing critical security and governance gaps that traditional tools often miss.

The platform is uniquely designed to detect, prevent, and respond to AI-specific threats, including prompt injection, model drift, shadow models, and adversarial attacks. It also protects the broader infrastructure by securing APIs, enforcing real-time policy controls, and monitoring for anomalous behavior across pipelines.

Crucially, PointGuard AI extends beyond technical defense to support governance, explainability, and compliance. The platform provides tools for audit logging, access control, and responsible AI assessments—all of which are vital for meeting the increasing expectations of regulators and enterprise customers.

Its integration-first architecture also ensures seamless deployment into existing DevSecOps pipelines, enabling teams to embed security directly into the AI lifecycle without slowing development. Whether organizations are building gen AI apps or deploying predictive ML models, PointGuard AI offers a unified layer of protection that scales with innovation.

Conclusion: Trust and Security as the Foundation of AI at Scale

The McKinsey State of AI report paints a clear picture: while organizations are excited by the value of generative AI, security-related concerns are holding back widespread deployment. Whether it’s a lack of robust oversight, inadequate monitoring, or uneven risk mitigation practices, many enterprises remain unprepared for the security demands of AI at scale.

But this gap is also an opportunity. Enterprises that adopt comprehensive AI security solutions—like PointGuard AI—will be better positioned to build trust, reduce risk, and accelerate innovation. In a landscape where AI performance, privacy, and reliability are under constant scrutiny, embedding security into the core of AI strategies is no longer optional—it’s essential.

As organizations move from experimentation to industrial-scale deployment, security will define the pace and sustainability of AI success. The leaders of tomorrow are acting today to secure that future.