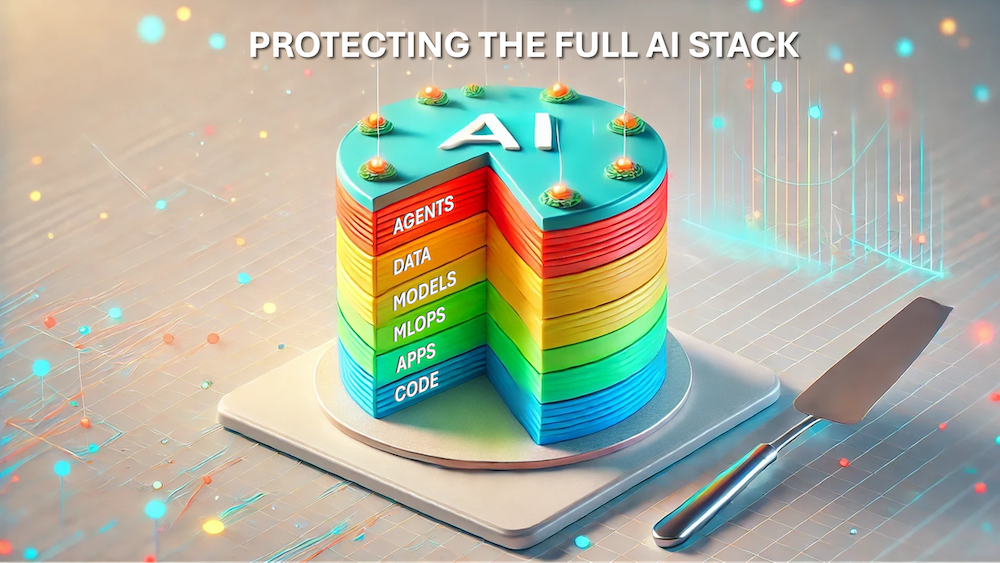

AI is accelerating innovation across every industry, but it’s also introducing unprecedented risk. As more AI models, agents, and pipelines enter production, security and governance have struggled to keep pace, especially when AI components are buried in source code or run unnoticed across fragmented MLOps platforms.

To help organizations eliminate these blind spots, PointGuard AI is expanding its platform to deliver AI discovery and threat correlation across the entire AI development lifecycle — including source code repositories, MLOps pipelines, and the full range of AI resources such as models, datasets, notebooks, and agents.

This is the first solution to give enterprises a unified, end-to-end view of their AI risk landscape, along with the tools to act on and mitigate those risks.

Connecting the Dots Across Source Code, Pipelines, and AI Resources

Until now, most AI security tools have focused narrowly on deployed models or isolated segments of the MLOps process. But real-world threats don’t respect those boundaries. An AI model embedded in a GitHub repo, a vulnerable model pulled into a notebook, or a prompt injection slipped into a shared code snippet can all become attack vectors long before a model is deployed.

PointGuard’s latest capabilities extend deep into the development stack to uncover hidden and often overlooked AI components. The platform now scans source code repositories, such as those hosted on GitHub, to detect:

- AI models

- Datasets and training pipelines

- Notebooks, API calls, and libraries

- Prompts, prompt injection patterns, and agent definitions

- RAG (retrieval-augmented generation) and MCP (Model Context Protocol) calls

- External application and data source connections
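For readers who want a feel for what code-level AI discovery involves, here is a deliberately simplified Python sketch. It is not PointGuard’s implementation. It walks a directory of Python files, uses the standard-library `ast` module to flag imports from a hand-picked list of AI libraries, and applies two illustrative regular expressions to flag strings that look like prompt-injection payloads. The library list, the patterns, and the `Finding`, `scan_file`, and `scan_repo` names are all hypothetical and were chosen only for this example.

```python
import ast
import re
from dataclasses import dataclass
from pathlib import Path

# Hypothetical, illustrative signatures; a real scanner would need far richer ones.
AI_LIBRARIES = {"openai", "anthropic", "transformers", "torch", "tensorflow", "langchain", "mcp"}
INJECTION_PATTERNS = [
    re.compile(r"ignore (all )?(previous|prior) instructions", re.IGNORECASE),
    re.compile(r"disregard (the )?system prompt", re.IGNORECASE),
]


@dataclass(frozen=True)
class Finding:
    path: str
    line: int
    kind: str    # "ai_library" or "prompt_injection"
    detail: str  # library name or the matching pattern


def scan_file(path: Path) -> list[Finding]:
    source = path.read_text(encoding="utf-8", errors="replace")
    findings: list[Finding] = []

    # AST pass: record absolute imports whose top-level package is a known AI library.
    try:
        tree = ast.parse(source)
    except SyntaxError:
        tree = None
    if tree is not None:
        for node in ast.walk(tree):
            if isinstance(node, ast.Import):
                names = [alias.name for alias in node.names]
            elif isinstance(node, ast.ImportFrom) and node.module and node.level == 0:
                names = [node.module]
            else:
                continue
            for name in names:
                top = name.split(".")[0]
                if top in AI_LIBRARIES:
                    findings.append(Finding(str(path), node.lineno, "ai_library", top))

    # Text pass: flag lines containing prompt-injection-like phrases.
    for lineno, text in enumerate(source.splitlines(), start=1):
        for pattern in INJECTION_PATTERNS:
            if pattern.search(text):
                findings.append(Finding(str(path), lineno, "prompt_injection", pattern.pattern))
    return findings


def scan_repo(root: str) -> list[Finding]:
    # Scan every .py file under root in a stable (sorted) order.
    results: list[Finding] = []
    for path in sorted(Path(root).rglob("*.py")):
        results.extend(scan_file(path))
    return results
```

A production scanner would also have to cover other languages, notebooks, configuration files, and model artifacts, and would need much stronger detection than a few regular expressions, which are easy to evade.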

Every discovered asset is automatically inventoried, assessed for supply chain and regulatory risk, and integrated with existing governance and protection tools — including runtime guardrails, AI posture management, and automated red teaming.

This level of cross-channel visibility allows organizations not only to detect threats earlier but also to correlate them across environments. For example, PointGuard can identify if a vulnerable model found in code is linked to an agent with excessive access privileges, or if a dataset used in training is tied to personally identifiable information (PII) violations in production.

Real-World Threats Are Already Here

The risks of unmanaged AI components are no longer hypothetical. A recent IBM survey revealed that 13% of organizations have already experienced breaches involving AI models, and 97% of those lacked basic access controls.

In live environments, attackers have already exploited these gaps: inserting prompt injections into code to trick AI agents into deleting infrastructure, leaking credentials, or escalating privileges. These attacks often originate in early development phases, where traditional AppSec tools can’t detect them and AI governance platforms haven’t yet been applied.

By extending visibility and threat detection to the code layer, PointGuard helps security teams stop these threats before they propagate downstream — giving them the power to shift AI security left and regain control over fast-moving AI initiatives.

From Discovery to Governance — Full Lifecycle Protection

PointGuard was already trusted to secure AI pipelines on Databricks, Azure, AWS, and GCP, providing visibility into models, endpoints, datasets, and runtime environments.

With this release, the platform covers AI from the earliest stages of development through deployment and operations. It allows teams to:

- Discover all AI assets — including those embedded in source code

- Detect misconfigurations, ungoverned tools, and emerging threats

- Correlate risks across pipelines, environments, and identity boundaries

- Enforce policies and guardrails in real time

- Demonstrate compliance with frameworks such as the NIST AI RMF, the EU AI Act, and the OWASP Top 10 for LLM Applications

This comprehensive visibility is especially critical in enterprises where multiple teams — often working in different clouds or across business units — are building and deploying AI at speed. Without a unified platform, there’s no way to connect risk signals across silos or ensure consistent governance.

PointGuard eliminates that fragmentation by serving as the central control plane for AI security.

Built for Security and Innovation

“Our goal is to enable AI innovation — not hinder it,” said Pravin Kothari, Founder and CEO of PointGuard AI. “But that requires visibility, security, and governance across the entire AI lifecycle. By extending our discovery and threat correlation capabilities to code repositories, we’re exposing and securing AI tools before they become embedded in enterprise applications.”

The expanded platform also supports flexible deployment (on-premises, cloud, or hybrid environments), giving organizations the flexibility to secure sensitive AI agents, models, and chatbots wherever they run.

Whether you're a security leader trying to assess AI risk, a compliance team navigating new regulations, or a developer building AI features into your product, PointGuard now provides a single, unified solution to help you move faster — and more securely.

The First Step Toward True AI Security

As AI adoption accelerates, organizations are under pressure to balance innovation with responsibility. But without knowing where AI is being used — and how it’s configured — that’s impossible. By delivering AI discovery and threat correlation across source code, pipelines, and AI resources, PointGuard is helping security and governance teams finally get ahead of the AI security curve.

To learn more about how PointGuard AI can secure your AI initiatives from development to deployment, get in touch or request a demo.