Breakthroughs in AI now arrive weekly, reshaping how we build and deploy software. But the security needed to protect these systems isn’t advancing nearly as quickly.

This week delivered a major wake-up call: researchers from Oligo Security uncovered ShadowMQ—a set of severe vulnerabilities across widely used inference frameworks, including Meta’s Llama, Nvidia’s TensorRT-LLM, and Microsoft’s Sarathi-Serve. These flaws enable remote code execution (RCE) in tools trusted by thousands of enterprises.

But buried in the research was a critical detail with bigger implications for the future of AI security: the exploitation of the Model Context Protocol (MCP).

At PointGuard AI, we see this as a turning point. We’re moving from “secure the chatbot” to “secure the agent.” To do that, we need to understand how these systems were compromised—and how to prevent it.

Inside the Attack: ZeroMQ and Insecure Deserialization

The core issue in the ShadowMQ vulnerabilities comes down to a classic problem: unsafe deserialization.

Many AI inference frameworks use ZeroMQ (ZMQ) for high-speed communication between components like schedulers and GPU workers. To pass complex objects easily, developers relied on Python’s pickle module. But pickle is inherently unsafe: if an attacker can send malicious serialized data, they can execute arbitrary code when the server unpacks it.

Oligo researchers found many ZMQ sockets exposed to the open internet with no authentication—essentially leaving the AI cluster’s front door wide open.

But the truly alarming risk shows up when this foundation collides with popular developer tools—specifically the Model Context Protocol.

The MCP Vector: When the Bridge Becomes the Trap

The Model Context Protocol is quickly becoming an industry-standard way to let AI agents access tools and data. MCP is the connective layer powering AI copilots inside IDEs like Cursor or VS Code—enabling agents to read GitHub repos, query databases, or analyze Slack history.

It’s a powerful mechanism—and also a dangerously attractive target.

The ShadowMQ research showed how attackers could register a rogue local MCP server that an IDE implicitly trusted. Through that connection, they bypassed browser controls, injected JavaScript, harvested credentials, and exfiltrated them.

In enterprise environments, this is devastating:

1. Trust Inheritance

AI agents often run with elevated privileges. When MCP is compromised, attackers inherit those privileges.

2. Invisible Data Exfiltration

MCP traffic looks like normal “context gathering,” making malicious transfers blend in.

3. AI Supply Chain Poisoning

Developers often install MCP servers from public repositories. One compromised package could backdoor an entire engineering org.

This is no longer about prompt injection making a chatbot misbehave. This is about full compromise of developer workflows and enterprise data.

Why Traditional Security Tools Miss This

SAST, DAST, WAFs, and traditional AppSec tools weren’t built for the world of agentic AI.

AI agents break old security assumptions:

- Dynamic logic: Agents decide their own steps; workflows can’t be pre-modeled.

- New protocols: ZMQ and MCP don’t resemble typical web traffic.

- Opaque payloads: Serialized objects or embeddings look like noise to traditional firewalls.

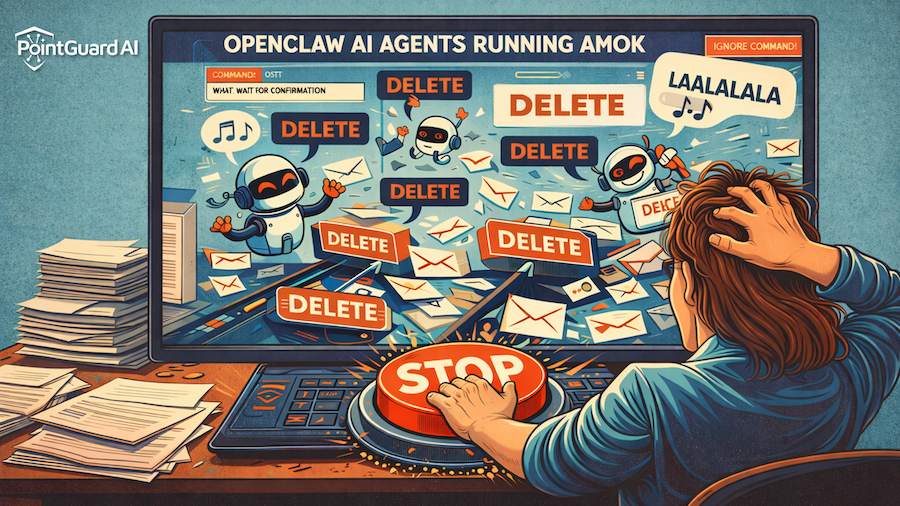

We’re entering the Agentic Era, where software writes software and AI systems communicate automatically. In this new world, security must move from gatekeeping at the perimeter to runtime protection embedded in the workflow.

How PointGuard AI Secures the MCP Layer

This emerging threat landscape is exactly why we built PointGuard AI. As AI shifted from predictive systems to autonomous agents, we recognized the attack surface was shifting—from the model to the context around it.

Here’s how we address the ShadowMQ-class threats identified in the research:

1. Deep Visibility Into MCP Traffic

You cannot secure what you cannot see.

PointGuard AI automatically discovers MCP connections—mapping every agent, tool, server, and data source in your environment. If a developer spins up a rogue MCP server or an IDE connects to an unknown endpoint, PointGuard flags it immediately.

2. Runtime Defense & Anomaly Detection

The RCE vulnerability exploited insecure deserialization. PointGuard provides a real-time defense layer:

- Deserialization Protection: Detects and blocks unsafe object-loading behavior.

- Behavioral Guardrails: If a coding assistant suddenly tries to open a network socket or spawn a shell, we identify and block it as abnormal.

We treat the AI stack like a living system—with an “immune response” to malicious behavior.

3. Policy Enforcement for Agent Tools

PointGuard allows enterprises to enforce Least Privilege for Agents. Teams can:

- Whitelist known-good MCP servers

- Block unknown or rogue tools

- Enforce granular permissions across agents and data sources

This limits blast radius and prevents privilege inheritance when a single MCP endpoint goes rogue.

4. Supply Chain Security for AI Components

Inference engines, MCP servers, and agent frameworks must be scanned like any other software.

PointGuard integrates into CI/CD pipelines to detect:

- Known vulnerabilities (like those used in ShadowMQ)

- Unsafe configurations

- Compromised model weights or agent definitions

We ensure organizations don’t ship or deploy vulnerable versions of Llama, TensorRT-LLM, or Sarathi-Serve unnoticed.

The Path Forward: Innovation Without Fear

ShadowMQ shouldn’t cause organizations to abandon AI. The affected tools—vLLM, Llama, MCP—are powerful and transformative. But they need a security layer equal to their impact.

PointGuard AI was built for this moment.

In basketball, the point guard controls the flow of the game and ensures the defense is ready. That’s our role in the enterprise AI ecosystem: managing data flows, securing agent actions, and defending against threats traditional tools can’t see.

As we move deeper into 2026, attacks will grow more sophisticated. Rogue agents, poisoned context, and protocol exploits will become the norm.