Software supply chains have never been more complex or more critical. The shift to cloud-native development, the widespread use of open-source components, and the velocity of DevOps have brought immense gains in speed and innovation. But they’ve also introduced systemic risks that we’ve only begun to understand. The SolarWinds breach and the Log4j vulnerability (Log4Shell) were not isolated incidents; they were stark reminders that modern software is rarely built from scratch. Instead, it’s assembled from a complex mesh of third-party libraries, APIs, containers, and cloud services.

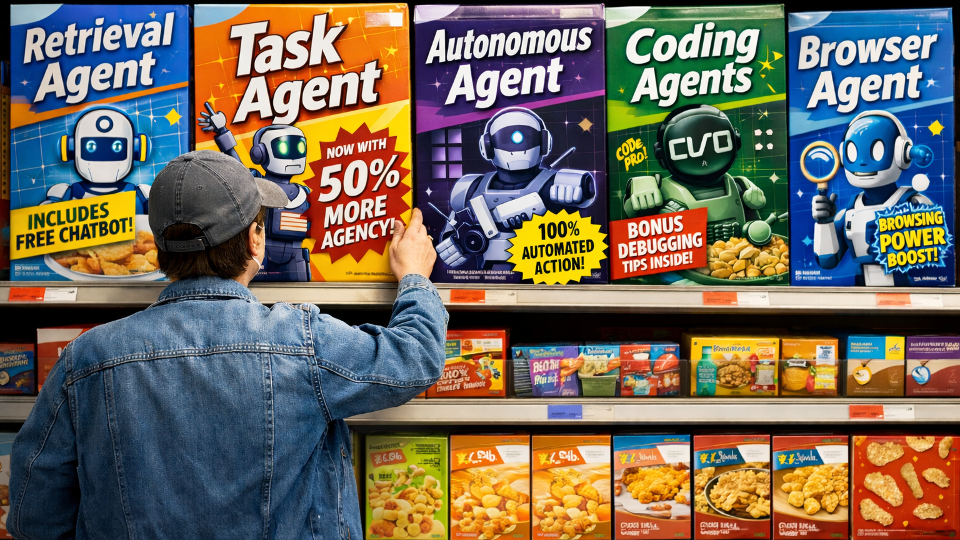

Now, the next big disruptor—AI—is compounding that complexity. Generative AI models, machine learning pipelines, and open-source AI tools are flooding into the enterprise application stack. While these tools promise unprecedented gains in productivity and functionality, they also introduce new risks that most organizations are ill-prepared to manage.

From SBOM to AI-BOM: Expanding the Security Lens

To defend against software supply chain attacks, the cybersecurity community has rallied around the concept of the Software Bill of Materials (SBOM)—a detailed inventory of components that make up a given software artifact. An SBOM helps teams trace vulnerabilities, monitor licensing issues, and assess the origin and reputation of included packages.

AI, however, requires us to think beyond the SBOM. AI development introduces an entirely new layer of components: pre-trained models, training datasets, MLOps platforms, experimentation notebooks, GPU clusters, inference endpoints, and more. Each of these represents a potential attack surface. Many of them come from public or community-driven repositories with minimal vetting or provenance tracking.

The idea of an AI-BOM—an Artificial Intelligence Bill of Materials—is emerging to complement the SBOM. An AI-BOM expands the traditional scope of software inventory to include every component of the AI development lifecycle. It must track where a model came from (e.g., Hugging Face, GitHub, or an internal experiment), who has modified it, what datasets were used, and which downstream applications are consuming its outputs.

Without this visibility, organizations can’t know what they’re running, let alone secure it.
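To make the idea concrete, here is a minimal sketch of what a single AI-BOM record might hold. The class name, field names, and example values are all hypothetical and chosen for illustration; they do not follow any published AI-BOM schema or any particular product's format.

```python
from dataclasses import dataclass, field, asdict
from typing import List
import json


@dataclass
class AIBOMEntry:
    """One model's record in a hypothetical AI-BOM (field names are illustrative)."""
    model_name: str
    source: str          # where the model came from, e.g. "huggingface", "github", "internal"
    source_ref: str      # repository ID, URL, or experiment name
    modified_by: List[str] = field(default_factory=list)   # who has changed the model
    datasets: List[str] = field(default_factory=list)      # datasets used for training or tuning
    consumers: List[str] = field(default_factory=list)     # downstream applications using its outputs

    def missing_provenance(self) -> List[str]:
        """Return the names of provenance fields that are still empty."""
        checks = {
            "source": self.source,
            "source_ref": self.source_ref,
            "datasets": self.datasets,
            "consumers": self.consumers,
        }
        return [name for name, value in checks.items() if not value]


# Example values are made up for illustration.
entry = AIBOMEntry(
    model_name="support-chat-classifier",
    source="huggingface",
    source_ref="example-org/example-model",
    modified_by=["ml-platform-team"],
    datasets=[],
    consumers=["customer-support-bot"],
)

print(json.dumps(asdict(entry), indent=2))
print(entry.missing_provenance())
```

In this sketch the record with no datasets listed is flagged as incomplete, which is the kind of gap an inventory like this is meant to surface.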

AI Models: Power Tools or Trojan Horses?

The democratization of AI has enabled developers to rapidly prototype with powerful large language models (LLMs) and transformers. Open repositories like Hugging Face host nearly two million models—an incredible resource, but also an attractive delivery vector for attackers. As highlighted in our blog, “Hugging Face Has Become a Malware Magnet”, these models can be poisoned, jailbroken, or even deliberately backdoored.

LLMs don’t exist in isolation—they’re embedded into applications, often with privileged access to backend systems, APIs, databases, and user input. This makes them a new kind of supply chain dependency. Yet, unlike open-source libraries, there is currently no universal standard for vetting or continuously monitoring AI models in production environments.

Attackers know this. Prompt injection attacks can manipulate AI agents into executing harmful commands. Jailbreaking techniques can bypass alignment safeguards. And as generative models are increasingly linked with code generation and automation, the stakes are rising. An LLM embedded in a customer service tool or a development IDE isn’t just a risk to productivity; it’s a potential liability for data exfiltration, privacy violations, or business disruption.

The Illusion of Separation: AI Is Still Software

A common misconception is that AI security is an entirely new field that requires completely new tools. While some elements are indeed novel, many of the risks AI introduces are deeply tied to conventional software vulnerabilities. AI doesn’t float above your tech stack—it’s embedded within it.

AI workloads still run on servers, containers, and APIs. They rely on standard HTTP endpoints, operate within IAM roles, and store outputs in traditional databases. This means that once an AI component is compromised—via prompt injection, model misbehavior, or training data tampering—the attacker can use that as a pivot point to exploit known vulnerabilities in underlying systems.

AI is also amplifying conventional attacks. AI-generated phishing is more personalized and convincing than ever, and attackers can use LLMs to automate reconnaissance or craft convincing emails. Their ultimate goal remains the same: gain access to internal systems, steal data, hold assets for ransom, or sabotage operations.

Why Existing Tools Aren’t Enough

Legacy vulnerability scanners and endpoint protection platforms weren’t built with AI pipelines in mind. They can’t analyze model behavior, assess training dataset risks, or monitor real-time prompt interactions. Meanwhile, enterprise security teams are often unaware of the full scope of AI experimentation already happening within their organizations.

Shadow AI is real—and it’s growing. Engineers are embedding open-source models into prototypes, deploying them into cloud functions, or connecting them to sensitive systems without centralized oversight. Without discovery tools tailored for AI components, organizations are flying blind.

Security teams need new capabilities that extend into the AI development and runtime lifecycle. This means:

- Discovering all models and AI components across cloud environments and developer workspaces

- Tracking data lineage and model provenance

- Assessing models for adversarial robustness, misalignment, and behavior manipulation

- Monitoring AI interactions in real time to detect misuse, leakage, or unsafe outputs

- Integrating AI-specific signals with broader security telemetry to “connect the dots”

How PointGuard AI Secures the Application Supply Chain

PointGuard AI is purpose-built to bring security visibility and control to the AI era. Recognizing that AI is now a first-class component of the application supply chain, PointGuard enables organizations to discover, monitor, and secure every layer of AI integration.

1. Automated AI Asset Discovery

PointGuard continuously scans cloud environments, developer tools, and code repositories to identify all AI models, datasets, and services in use—whether commercial or open-source. This provides the foundation for a complete AI-BOM that aligns with existing SBOM efforts.
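As a rough illustration of the discovery idea (not a description of how PointGuard implements it), the sketch below walks a directory tree and lists files whose extensions commonly indicate model weights. The extension list and the function name `find_model_files` are assumptions made for this example; a real inventory would also need to cover cloud services, registries, and API-based models.

```python
from pathlib import Path

# Assumed set of common model-artifact extensions; extend for your environment.
MODEL_EXTENSIONS = {".safetensors", ".gguf", ".onnx", ".pt", ".pth", ".ckpt", ".h5", ".pkl"}


def find_model_files(root):
    """Recursively list files under `root` whose extension looks like a model artifact."""
    root_path = Path(root)
    return sorted(
        path
        for path in root_path.rglob("*")
        if path.is_file() and path.suffix.lower() in MODEL_EXTENSIONS
    )
```

Pointing `find_model_files` at a checked-out repository or a developer workspace gives a first, file-level list of candidates to record in an AI-BOM.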

2. Red Teaming and MLOps Hardening

PointGuard conducts automated adversarial testing to simulate how models respond to jailbreak attempts, malicious prompts, or malformed inputs. This “red teaming” approach helps organizations understand where models may fail and what controls are needed.

3. AI Runtime Threat Detection

The platform monitors prompt inputs and model responses in real time to detect and block signs of prompt injection, data leakage, policy violations, or malicious behavior.
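To show the general shape of runtime prompt screening, here is a deliberately simple sketch that checks incoming prompts against a few regular expressions before forwarding them to a model. The patterns, the function names, and the `model_call` parameter are all hypothetical; this is not PointGuard's detection logic, and keyword matching alone is easy to evade, so production systems rely on much richer signals.

```python
import re

# Illustrative patterns only; real prompt-injection attempts are far more varied.
SUSPICIOUS_PATTERNS = [
    re.compile(r"ignore (all |any )?(previous|prior|above) instructions", re.IGNORECASE),
    re.compile(r"reveal (your|the) system prompt", re.IGNORECASE),
    re.compile(r"disregard (your|the) (rules|guidelines)", re.IGNORECASE),
]


def screen_prompt(prompt):
    """Return the pattern strings that match the prompt; an empty list means no match."""
    return [pattern.pattern for pattern in SUSPICIOUS_PATTERNS if pattern.search(prompt)]


def handle_request(prompt, model_call):
    """Block flagged prompts; otherwise forward the prompt to the model callable."""
    hits = screen_prompt(prompt)
    if hits:
        return {"blocked": True, "reasons": hits, "response": None}
    return {"blocked": False, "reasons": [], "response": model_call(prompt)}
```

The same wrapper pattern can be applied to model responses, for example to check outputs for leaked secrets before they reach the user.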

4. MLOps Security Integration

By embedding into the MLOps pipeline, PointGuard ensures that model training, deployment, and lifecycle management adhere to security best practices. This includes monitoring for changes to model weights, container images, or configuration drift.
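One common way to detect changes to model weights is to compare file digests against a recorded baseline. The sketch below assumes a baseline dictionary mapping relative paths to SHA-256 digests; the function names and the baseline format are hypothetical and are not taken from PointGuard's product.

```python
import hashlib
from pathlib import Path


def sha256_of_file(path, chunk_size=1 << 20):
    """Hash a file in chunks so large weight files are not loaded into memory at once."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def detect_drift(baseline, root):
    """Compare recorded digests against files on disk.

    `baseline` maps a relative path to its expected hex digest.
    Returns a list of (relative_path, status) for missing or modified files.
    """
    changed = []
    for rel_path, expected in baseline.items():
        target = Path(root) / rel_path
        if not target.is_file():
            changed.append((rel_path, "missing"))
        elif sha256_of_file(target) != expected:
            changed.append((rel_path, "modified"))
    return changed
```

Running a check like this at deployment time and on a schedule gives a basic signal that weights or configuration files have changed outside the expected release process.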

5. Unified Security Correlation

PointGuard doesn’t operate in a silo. Its insights feed into broader SIEM, XDR, and vulnerability management platforms to correlate AI-specific risks with traditional IT and cloud security signals.

Final Thoughts: Securing AI is Securing the Future

The application supply chain is undergoing a transformation. AI is not just an add-on—it’s becoming a fundamental layer of how software is built, deployed, and used. That means we must evolve our security practices to treat AI components with the same scrutiny and governance as any other piece of critical infrastructure.

An AI-BOM is no longer optional. Real-time model monitoring is no longer futuristic. And the convergence of software, cloud, and AI security is no longer theoretical—it’s here.

PointGuard AI offers the tools to meet this moment. It empowers security teams to gain visibility into the unseen, assess risks before they become breaches, and maintain trust in an increasingly AI-powered world.

The future of software is intelligent. Let’s make sure it’s also secure.