
Large Language Models (LLMs) like GPT-4 and Claude 3 have become foundational to modern enterprise workflows—from automating customer support to accelerating software development. But as their use expands, so do the security risks. LLMs introduce an entirely new class of vulnerabilities that traditional security frameworks were never designed to handle.

In response, the Open Worldwide Application Security Project (OWASP) created a specialized Top 10 list of security risks tailored to LLMs. With its 2025 update, this list has evolved to reflect real-world attack patterns, including newly documented threats like system prompt leakage, vector poisoning, and excessive agency in autonomous agents.

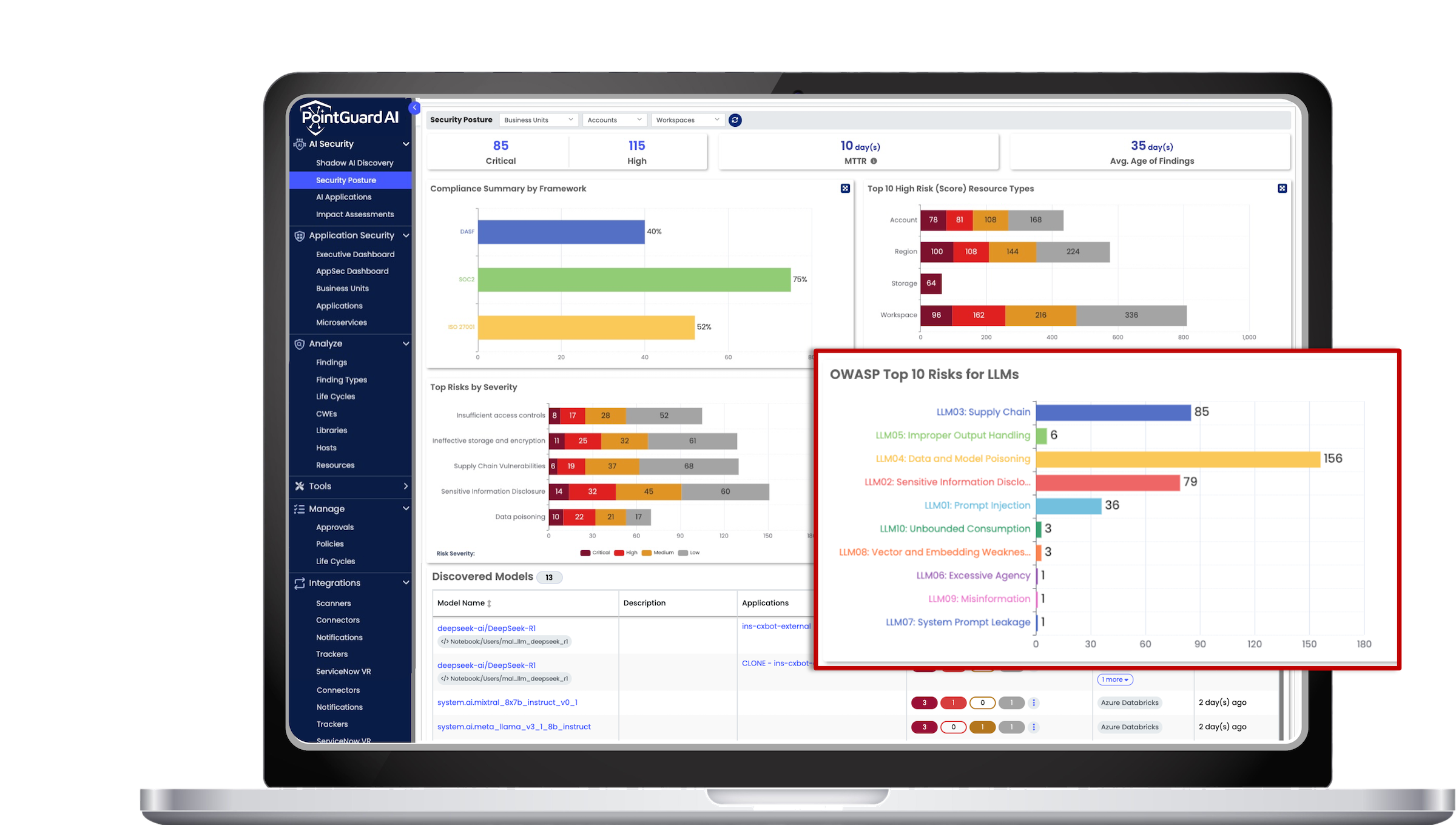

PointGuard AI not only aligns with OWASP’s LLM Top 10 but embeds the framework directly into our product. Each of the ten risks is tracked in real time through dedicated controls, dashboards, and policy automation. Security teams can detect, investigate, and mitigate these risks without needing to translate abstract guidelines into operational workflows. In addition, PointGuard is actively collaborating with OWASP on the development of a new AI Testing Guide, which will provide a practical, community-driven roadmap for evaluating the security and reliability of AI systems. This direct involvement ensures that PointGuard customers benefit from the most current best practices and play a role in shaping the future of AI security standards.

Here is a summary of the significant changes in the 2025 edition of the OWASP Top 10 for Large Language Model (LLM) Applications:

- Unbounded Consumption (LLM10:2025): Previously termed "Model Denial of Service," this category now encompasses broader issues related to resource management and unexpected costs, which are critical in large-scale LLM deployments.

- Vector and Embedding Weaknesses (LLM08:2025): A new entry that provides guidance on securing Retrieval-Augmented Generation (RAG) and embedding-based methods, which are now central to grounding model outputs.

- System Prompt Leakage (LLM07:2025): Added to address vulnerabilities where system prompts, previously assumed to be secure, have been exposed, leading to potential exploits.

- Excessive Agency (LLM06:2025): This category has been expanded to reflect the increased use of agentic architectures that grant LLMs more autonomy, highlighting the need for careful oversight to prevent unintended actions.

These updates reflect a deeper understanding of existing risks and the introduction of new vulnerabilities as LLMs become more integrated into real-world applications. The revised list serves as a crucial resource for developers and security professionals to prioritize efforts in identifying and mitigating critical security risks associated with generative AI applications.

The following is a summary of each of the ten risks, along with the recommended mitigation steps to protect the security and integrity of LLM applications.

LLM01:2025 Prompt Injection

- Definition: Malicious actors manipulate an LLM’s input to alter its behavior, potentially leading to unauthorized actions or leakage of sensitive information. These attacks can be direct, where a user explicitly modifies a prompt, or indirect, where external sources like user-generated content stealthily influence the model’s behavior. Such vulnerabilities are particularly dangerous in systems where LLMs interact with automation, APIs, or decision-making processes.

- Explanation: Attackers exploit weaknesses in input validation to bypass security controls, gain unauthorized access, or override system instructions. For example, an attacker could inject a prompt that convinces the model to ignore safety constraints, reveal sensitive data, or execute unintended commands. This is especially concerning in environments where LLMs are integrated into customer service, financial decision-making, or security automation.

- Recommendations: Use robust prompt filtering, input validation, and context-aware prompt handling to detect and neutralize injection attempts. Implement role-based access control (RBAC) to limit who can influence prompts and ensure system prompts remain hidden from user access. Additionally, monitor real-time interactions for anomaly detection to prevent adversarial manipulation.
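
The filtering and prompt-handling recommendations above can be sketched in a few lines of Python. This is a minimal illustration, not a complete defense: the pattern list, `flag_injection`, and `build_messages` are hypothetical names chosen for this example, and real injection attempts are far more varied than any fixed list of phrases.

```python
import re

# Illustrative patterns only (an assumption for this sketch); attackers
# routinely rephrase, translate, or encode their instructions.
SUSPICIOUS_PATTERNS = [
    re.compile(r"ignore (all |any )?(previous|prior|above) instructions", re.IGNORECASE),
    re.compile(r"reveal (your|the) (system )?prompt", re.IGNORECASE),
    re.compile(r"you are now in developer mode", re.IGNORECASE),
]


def flag_injection(text: str) -> list[str]:
    """Return the patterns that matched so the caller can log or block."""
    return [p.pattern for p in SUSPICIOUS_PATTERNS if p.search(text)]


def build_messages(system_prompt: str, user_text: str) -> list[dict]:
    """Keep system instructions and untrusted input in separate roles."""
    if flag_injection(user_text):
        raise ValueError("input rejected by injection screen")
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_text},
    ]
```

Keeping untrusted text out of the system role matters more than the pattern list itself; the screen is best treated as one signal feeding anomaly monitoring rather than as a gate that can be trusted on its own.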

LLM02:2025 Sensitive Information Disclosure

- Definition: LLMs unintentionally expose sensitive data such as personally identifiable information (PII), financial records, or security credentials. This risk arises from models trained on unfiltered data, where sensitive information may be memorized and reproduced in outputs. Data leaks can lead to legal liabilities, compliance violations, and loss of consumer trust.

- Explanation: Improper handling of training data and response generation can cause LLMs to leak sensitive information. If an LLM has been trained on proprietary documents, confidential emails, or scraped web data, it may inadvertently generate responses that contain names, credit card numbers, API keys, or classified business data. Attackers can even probe models using targeted prompts to extract hidden information.

- Recommendations: Implement strong access controls, encrypt sensitive data, and use differential privacy techniques to reduce memorization risks. Regularly audit model outputs for data leakage by simulating adversarial queries and monitoring for unexpected disclosures. Additionally, apply real-time redaction techniques to filter out sensitive information before delivering responses.
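
As a rough illustration of real-time redaction, the sketch below scrubs a model response before it is returned to the user. The rule names and patterns are assumptions made for this example (the `sk-` key prefix in particular is hypothetical), and regular expressions alone will miss many forms of sensitive data.

```python
import re

# Hypothetical redaction rules; a production system would pair these with
# a dedicated PII detection service and organization-specific patterns.
REDACTION_RULES = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    # 13 to 16 digits, optionally separated by single spaces or hyphens.
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
    # Assumed key format for illustration: "sk-" plus 16 or more characters.
    "API_KEY": re.compile(r"\bsk-[A-Za-z0-9]{16,}\b"),
}


def redact(text: str) -> str:
    """Replace every match of every rule with a labeled placeholder."""
    for label, pattern in REDACTION_RULES.items():
        text = pattern.sub(f"[REDACTED_{label}]", text)
    return text
```

The same function can be run over logged adversarial test queries during audits, which gives a simple count of how often the model attempts to emit data that should never leave it.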

LLM03:2025 Supply Chain Vulnerabilities

- Definition: Third-party components, including pre-trained models and datasets, introduce security risks if compromised or manipulated. LLMs rely on a complex supply chain that includes open-source frameworks, APIs, pre-trained weights, and fine-tuning datasets, all of which can be potential attack vectors. A compromised supply chain can inject backdoors, biases, or even malicious behaviors into deployed models.

- Explanation: Dependency on open-source or third-party models increases the risk of poisoning attacks, biased outputs, and system failures due to unverified data sources. Attackers may introduce malicious dependencies or tampered datasets, allowing them to control model outputs or exploit users who interact with the model. Additionally, adversaries can register typosquatted dependencies that developers mistakenly include in their projects, introducing hidden threats.

- Recommendations: Vet external components thoroughly by using cryptographic checksums, software bills of materials (SBOMs), and signed model artifacts. Implement a zero-trust supply chain security model that continuously verifies data integrity and enforces strict access control for external APIs. Finally, establish continuous monitoring for third-party dependencies and automatically flag untrusted sources.
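
Checksum verification is the simplest of these controls to put in place. The sketch below assumes the publisher of a model artifact distributes a SHA-256 digest through a trusted channel; `sha256_of` and `verify_artifact` are illustrative helper names, not part of any particular tool.

```python
import hashlib
import hmac
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large model weights never load fully into memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_artifact(path: Path, expected_sha256: str) -> bool:
    """Compare against the published digest before the artifact is loaded."""
    return hmac.compare_digest(sha256_of(path), expected_sha256.lower())
```

A checksum only proves the file matches what was published. It says nothing about whether the publisher is trustworthy, which is why signed artifacts and SBOMs are recommended alongside it.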

LLM04:2025 Data and Model Poisoning

- Definition: Attackers introduce malicious data into training sets or fine-tuned models to influence LLM behavior. Poisoned models may exhibit subtle biases, security backdoors, or embedded attack vectors that only trigger under specific conditions. This is a particularly concerning issue in environments where LLMs autonomously generate reports, write code, or assist in cybersecurity operations.

- Explanation: Poisoned datasets can introduce biases, degrade model performance, or create backdoors that can be exploited post-deployment. For example, an attacker could inject malicious training data that causes an LLM to promote false narratives or overlook security vulnerabilities. Model poisoning attacks are difficult to detect because the training data is vast and not always manually reviewed.

- Recommendations: Use anomaly detection techniques and data provenance tracking to ensure datasets remain untampered. Implement secure training pipelines where only verified and cryptographically signed datasets are used for model updates. Additionally, monitor for unexpected model behavior that could indicate poisoning attempts.
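
One way to approximate a verified training pipeline is to authenticate each dataset before it is used. The sketch below uses an HMAC over a canonical hash of the records as a stand-in for a real digital signature; the function names and the shared key are assumptions for illustration, and a production pipeline would more likely use asymmetric signatures managed by a key service.

```python
import hashlib
import hmac
import json


def manifest_digest(records: list[dict]) -> str:
    """Hash records in canonical JSON form so key order does not change the digest."""
    canonical = json.dumps(records, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def sign_manifest(records: list[dict], key: bytes) -> str:
    """Produce an HMAC tag over the dataset digest (stand-in for a signature)."""
    return hmac.new(key, manifest_digest(records).encode("ascii"), hashlib.sha256).hexdigest()


def is_untampered(records: list[dict], key: bytes, signature: str) -> bool:
    """Reject the dataset if any record changed after it was signed."""
    return hmac.compare_digest(sign_manifest(records, key), signature)
```

This detects tampering after signing but not data that was already poisoned when it was signed, so it complements anomaly detection and behavioral monitoring rather than replacing them.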

LLM05:2025 Improper Output Handling

- Definition: LLM-generated content is not properly validated before being used by downstream applications. Unfiltered model outputs may contain malicious links, incorrect code suggestions, or harmful misinformation. Improper handling can also result in security vulnerabilities if models generate executable commands that are directly used in scripts.

- Explanation: Failing to sanitize LLM outputs can lead to security exploits like code injection, phishing attacks, or regulatory compliance violations. Attackers may craft queries that cause the model to output malicious SQL statements, JavaScript injections, or harmful command-line operations. When an LLM’s output is automatically integrated into applications, these risks multiply.

- Recommendations: Implement strict output validation, sanitization, and execution safeguards before forwarding LLM-generated content. Use whitelisting and sandboxing techniques to prevent the direct execution of model-generated commands. Additionally, train models with reinforcement learning techniques that discourage harmful or misleading responses.
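
The sketch below shows two small safeguards of this kind: escaping model output before it is rendered in a web page, and checking a model-suggested command against an allowlist before anything is run. The allowlist contents and function names are hypothetical, and the example deliberately stops short of executing the command.

```python
import html
import shlex

# Hypothetical allowlist; keep it as small as the application permits.
ALLOWED_COMMANDS = {"ls", "cat", "grep"}
SHELL_METACHARACTERS = set(";|&`$><")


def render_safe(llm_text: str) -> str:
    """Escape model output so it is displayed as text, never as markup."""
    return html.escape(llm_text)


def parse_allowed_command(llm_command: str) -> list[str]:
    """Return an argv list only if the command passes the allowlist checks."""
    if SHELL_METACHARACTERS & set(llm_command):
        raise PermissionError("shell metacharacters are not allowed")
    argv = shlex.split(llm_command)
    if not argv or argv[0] not in ALLOWED_COMMANDS:
        raise PermissionError("command is not on the allowlist")
    return argv
```

If the returned argument list is later executed, passing it as a list to a sandboxed process (rather than as a string to a shell) keeps the model from smuggling in extra commands.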

LLM06:2025 Excessive Agency

- Definition: Granting LLMs too much decision-making power can lead to security risks and unintended actions. As LLMs become more integrated into automation, DevOps, and cybersecurity workflows, excessive autonomy may allow them to modify system settings, authorize transactions, or make high-impact changes without human oversight.

- Explanation: Overly autonomous LLMs executing actions without verification may lead to unauthorized transactions, data manipulation, or incorrect enforcement of security policies. For example, an attacker could manipulate a financial chatbot to approve fraudulent transactions by triggering unexpected decision pathways in the model.

- Recommendations: Apply the principle of least privilege, require human-in-the-loop oversight, and restrict high-risk actions that an LLM can perform autonomously. Establish clear accountability measures that track every action taken by AI-driven systems. Implement multi-step verification for any high-impact automated decisions.
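
A common way to apply these recommendations is to route every tool call an agent makes through a single gateway. The sketch below is one possible shape for such a gateway; `ToolGateway`, its fields, and the approval callback are all hypothetical names for this example rather than the API of any agent framework.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ToolGateway:
    low_risk: dict[str, Callable[..., object]]   # may run autonomously
    high_risk: dict[str, Callable[..., object]]  # require approval first
    approve: Callable[[str, dict], bool]         # human-in-the-loop hook
    audit_log: list[tuple[str, dict, str]] = field(default_factory=list)

    def call(self, name: str, **kwargs):
        """Run a tool if policy allows it, and record the outcome either way."""
        if name in self.low_risk:
            outcome, result = "executed", self.low_risk[name](**kwargs)
        elif name in self.high_risk and self.approve(name, kwargs):
            outcome, result = "approved", self.high_risk[name](**kwargs)
        else:
            # Unknown tools and unapproved high-risk tools are both denied.
            outcome, result = "denied", None
        self.audit_log.append((name, kwargs, outcome))
        return result
```

Anything not explicitly registered is denied by default, which is the least-privilege part; the audit log supplies the accountability trail, and the `approve` hook is where a human reviewer or a multi-step verification flow would plug in.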

LLM07:2025 System Prompt Leakage

- Definition: Unauthorized exposure of system-level prompts or instructions that guide LLM behavior. These prompts contain critical operational logic, safety constraints, and security policies, and their leakage can allow attackers to manipulate the model, bypass restrictions, or extract confidential system configurations. System prompts are often embedded in models to enforce compliance and safety but can sometimes be extracted through clever querying.

- Explanation: Attackers can exploit weaknesses to extract hidden system instructions, revealing model policies, decision logic, and backend configuration settings. This could enable prompt injection attacks, allowing adversaries to override safety mechanisms, disable filtering systems, or access restricted features. If an LLM is used in legal, medical, or financial applications, leaked prompts could expose policy enforcement rules that attackers could exploit to produce fraudulent or unethical outputs.

- Recommendations: Conceal system prompts using encrypted storage, access control mechanisms, and request isolation techniques. Prevent models from directly outputting system instructions by limiting verbose error messages and sanitizing debugging information. Conduct adversarial testing to simulate extraction attacks and refine prompt protection strategies.
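
One inexpensive output-side check is to block responses that repeat a long run of words from the system prompt. The function below is a minimal sketch under that assumption; the name `leaks_system_prompt` and the default window of eight words are illustrative choices, and paraphrased or encoded leaks will slip past a verbatim match.

```python
def leaks_system_prompt(response: str, system_prompt: str, window: int = 8) -> bool:
    """True if any run of `window` consecutive prompt words appears in the response."""
    words = system_prompt.lower().split()
    if not words:
        return False
    # Normalize whitespace so line breaks in the response do not hide a match.
    haystack = " ".join(response.lower().split())
    # Prompts shorter than the window are checked as a single run.
    last_start = max(1, len(words) - window + 1)
    return any(" ".join(words[i:i + window]) in haystack for i in range(last_start))
```

Because this only catches verbatim leakage, the safer design assumption is that a system prompt may eventually be extracted, so secrets and enforcement logic are better kept outside the prompt altogether.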

LLM08:2025 Vector and Embedding Weaknesses

- Definition: Security vulnerabilities in vector databases and embedding models can lead to manipulation and unauthorized data access. Many modern LLM applications leverage Retrieval-Augmented Generation (RAG) and embeddings to enhance responses with real-time data. If these embeddings are poisoned, manipulated, or improperly secured, attackers can inject harmful content, alter search results, or extract sensitive information from stored embeddings.

- Explanation: Weaknesses in how vectors and embeddings are stored and retrieved in search, recommendation, and AI-driven automation systems may enable adversaries to introduce adversarial inputs, influence model behavior, or manipulate personalized responses. Attackers could craft input sequences that decode hidden data from vector stores or insert harmful embeddings that distort an LLM’s decision-making. If embedding security is neglected, even secure LLMs can be compromised through malicious vector manipulations.

- Recommendations: Implement fine-grained access controls on embedding databases and use encryption to protect stored vectors. Regularly monitor embeddings for anomalies and apply adversarial training to reduce susceptibility to poisoned data. Additionally, restrict untrusted input sources and validate external data before incorporating it into the embedding pipeline.
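
Fine-grained access control in a RAG pipeline usually means filtering by permissions before ranking by similarity, so that restricted chunks never reach the model's context. The sketch below uses an in-memory list in place of a real vector database; `Chunk`, `retrieve`, and the group names are assumptions for illustration.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Chunk:
    text: str
    embedding: tuple[float, ...]
    allowed_groups: frozenset[str]  # who may see this chunk


def cosine(a: tuple[float, ...], b: tuple[float, ...]) -> float:
    """Cosine similarity, returning 0.0 for zero-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(query_embedding, store: list[Chunk], user_groups: set[str], k: int = 3) -> list[Chunk]:
    """Apply the access filter first, then rank only what the user may see."""
    visible = [c for c in store if c.allowed_groups & user_groups]
    ranked = sorted(visible, key=lambda c: cosine(query_embedding, c.embedding), reverse=True)
    return ranked[:k]
```

Filtering after ranking would work too, but filtering first means a bug in the ranking or prompt-assembly code cannot expose a chunk the user was never allowed to retrieve.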

LLM09:2025 Misinformation

- Definition: LLMs generate incorrect or misleading information, leading to reputational, legal, and security risks. Unlike traditional software, LLMs do not have fact-checking mechanisms and often generate responses based on probabilities rather than truth. When users overtrust these outputs in critical fields such as medicine, law, or cybersecurity, they may unknowingly rely on false or misleading information.

- Explanation: Hallucinations occur when LLMs confidently generate outputs that sound plausible but are entirely fabricated. This can be exploited by attackers who intentionally mislead LLMs with adversarial inputs, causing them to spread misinformation. Additionally, biased training data, lack of real-time knowledge updates, and ambiguous queries can further exacerbate the risk of generating inaccurate or harmful outputs. Misinformation from LLMs can be used in social engineering attacks, propaganda, or fraud.

- Recommendations: Use truthfulness scoring models, reinforcement learning from human feedback (RLHF), and verification pipelines to fact-check high-stakes LLM outputs. Implement disclaimers for AI-generated content, particularly in critical decision-making applications. Consider integrating trusted knowledge bases and retrieval-based AI architectures to ground responses in factual, up-to-date sources.
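
A verification pipeline can start with something as simple as checking whether each sentence of an answer is supported by the retrieved sources. The sketch below uses plain word overlap as a crude stand-in for a real truthfulness or entailment model; the function names and the 0.6 threshold are assumptions for this example, and word overlap will both miss subtle errors and flag valid paraphrases.

```python
import re


def _tokens(text: str) -> set[str]:
    """Lowercased alphanumeric words in the text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def support_score(claim: str, sources: list[str]) -> float:
    """Fraction of the claim's words found in the best-matching source."""
    claim_tokens = _tokens(claim)
    if not claim_tokens or not sources:
        return 0.0
    return max(len(claim_tokens & _tokens(s)) / len(claim_tokens) for s in sources)


def needs_review(answer: str, sources: list[str], threshold: float = 0.6) -> list[str]:
    """Return the sentences that are weakly supported and should be checked."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", answer) if s.strip()]
    return [s for s in sentences if support_score(s, sources) < threshold]
```

Flagged sentences can be routed to a human reviewer or shown with a disclaimer, which keeps unsupported claims from being presented with the same confidence as grounded ones.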

LLM10:2025 Unbounded Consumption (Formerly Model Denial of Service)

- Definition: Resource-intensive LLM queries lead to service disruptions, excessive costs, or denial-of-service (DoS) attacks. Unlike traditional web services, LLMs consume significant computational resources, making them susceptible to adversarial queries that exploit high-processing workloads. Attackers can intentionally trigger long, recursive computations or force the LLM to generate excessively large outputs, leading to performance degradation and financial drain.

- Explanation: Attackers may craft inputs that trigger computationally expensive operations, such as self-referential loops, exhaustive search tasks, or brute-force exploitation of complex prompts. In cloud-based LLM deployments, adversaries can cause Denial of Wallet (DoW) attacks, where excessive resource consumption leads to skyrocketing operational costs. This is particularly critical for API-based AI services that charge per-token usage, as attackers can exploit costly inference models to drain organizational budgets.

- Recommendations: Implement rate limiting, execution time constraints, and adaptive throttling to prevent abuse. Enforce cost-aware execution policies that dynamically adjust computational limits based on query complexity. Additionally, deploy anomaly detection systems to monitor for unusual spikes in resource utilization and proactively block abusive request patterns.
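
Rate limiting for LLM APIs is often easier to reason about in tokens than in requests, since cost scales with tokens. The sketch below is a simple token-bucket budget with a per-request cap; `TokenBudget` and its parameters are hypothetical names for this example, and a real deployment would track one budget per user or API key.

```python
import time


class TokenBudget:
    """Token bucket: a refillable allowance of LLM tokens plus a per-request cap."""

    def __init__(self, capacity: float, refill_per_second: float,
                 max_per_request: int, clock=time.monotonic):
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.max_per_request = max_per_request
        self._clock = clock  # injectable so the limiter can be tested without sleeping
        self._available = capacity
        self._last = clock()

    def allow(self, requested_tokens: int) -> bool:
        """Refill based on elapsed time, then accept or reject the request."""
        now = self._clock()
        elapsed = now - self._last
        self._available = min(self.capacity, self._available + elapsed * self.refill_per_second)
        self._last = now
        if requested_tokens > self.max_per_request or requested_tokens > self._available:
            return False
        self._available -= requested_tokens
        return True
```

The per-request cap bounds the cost of any single oversized prompt, while the refill rate bounds sustained spend, which addresses both the denial-of-service and the Denial of Wallet scenarios described above.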

Conclusion

The OWASP Top 10 for LLMs is more than a list—it’s a security imperative for any organization integrating generative AI into their operations. As models grow more powerful and pervasive, so too do the risks they introduce. From subtle prompt injection exploits to large-scale vector poisoning and autonomous agent misbehavior, today’s LLM-driven applications face a complex and rapidly evolving threat landscape.

Staying ahead requires more than just awareness—it requires action. That’s why PointGuard AI has built OWASP’s LLM Top 10 directly into our platform, providing native support for monitoring, mitigating, and governing these risks across your entire AI ecosystem. And our commitment doesn’t stop there: we are working directly with OWASP to co-develop the upcoming AI Testing Guide, helping define how organizations should validate and secure LLM applications at scale.

With PointGuard, security teams don’t need to reinvent their approach for AI. Instead, they gain access to a purpose-built system that turns high-level frameworks into real-time, operational defenses. Whether you're deploying customer-facing chatbots, internal RAG systems, or complex autonomous agents, PointGuard ensures that your AI remains secure, compliant, and resilient.
